Production-Ready AI Agents: The 2026 Reality Check

What enterprises are actually learning about deploying AI agents in production. Governance frameworks, failure patterns, and the uncomfortable truth about why most pilots fail.

Browse any engineering subreddit or attend a tech conference in early 2026, and you will hear the same refrain: "Our AI agents are in production." But beneath that surface confidence lies a different story. Fifty-seven percent of enterprises claim to have agents running in production, yet only thirty percent of those have systems delivering measurable return on investment.

The gap between a working demo and a reliable production system is where projects fail.

This is not a technology problem. The model layer is not the bottleneck. The real failures come from governance, memory management, tool overload, and broken architectural assumptions that look fine on a laptop but collapse under real workloads.

This article decodes what enterprises are actually learning from production deployments in 2026: the governance frameworks that work, the failure patterns that repeat, and the path to reliable autonomous workflows.

Why Most AI Agents Fail: The Real Root Causes

Three years of hype promised that large language models would become the foundation for autonomous systems. The implied narrative was simple: give the model a goal, tools, and context, and it would solve problems reliably.

Production reality has been humbling.

Forty percent of multi-agent pilots fail within six months of deployment. But failure is not random. It follows predictable patterns.

The Three Traps That Kill Production Agents

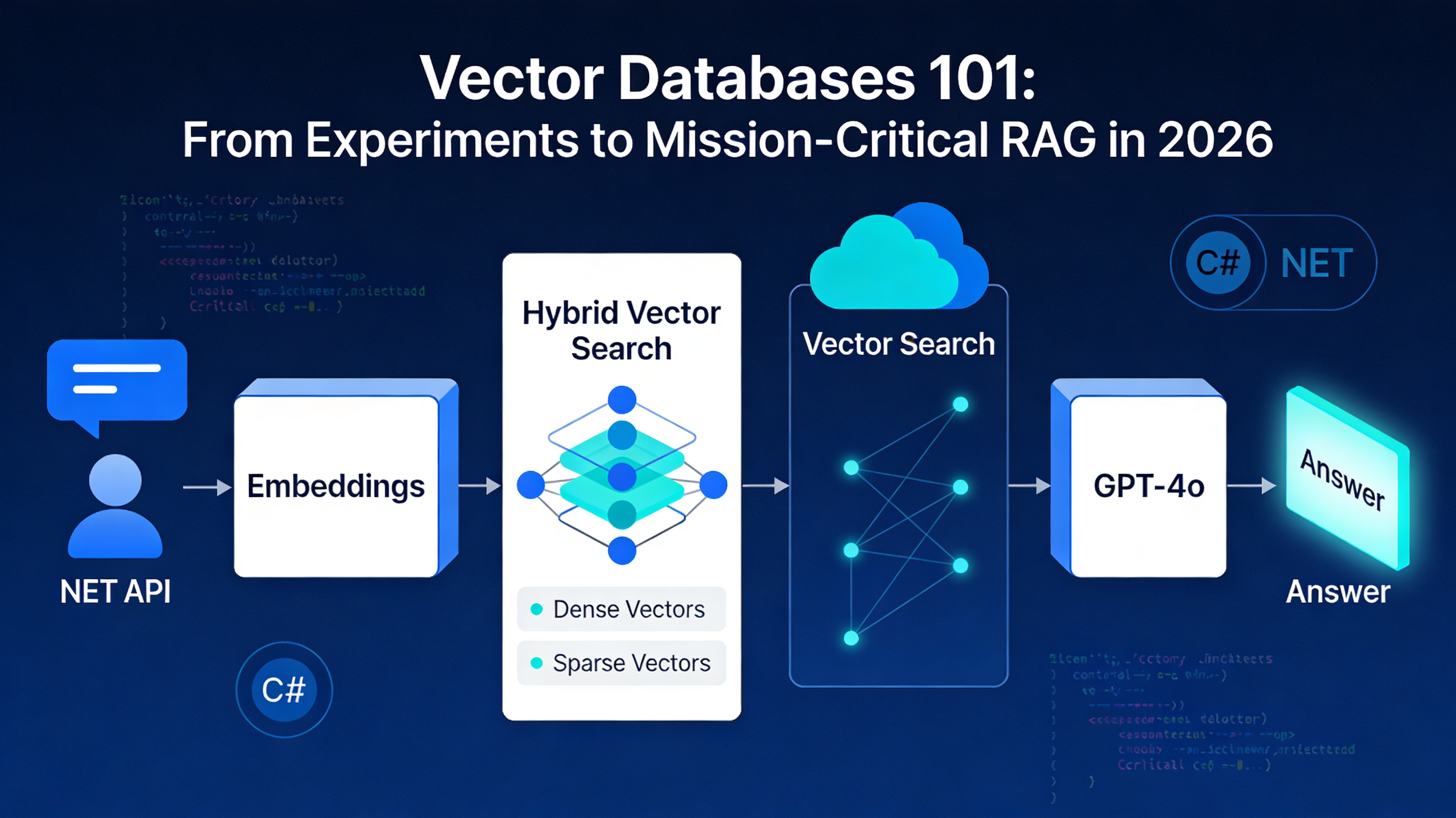

Trap 1: Dumb RAG (Retrieval-Augmented Generation)

Most teams dump everything they have into the agent's context window: entire documents, full conversation histories, raw API responses, and unfiltered database results. The agent drowns in noise.

The symptom appears as context window amnesia. The agent forgets its original objective halfway through a five-step workflow. It loses track of what it has already attempted. It begins reasoning about irrelevant details because they occupy more tokens than the actual task.

The root cause is architectural. You are treating context as storage. You are not managing what the agent actually needs to reason at each step.

The fix is explicit state management. Track what the agent has accomplished. Maintain a structured log of tool outputs. Feed the agent only the signals relevant to its current reasoning step, not the entire history.
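A minimal sketch of explicit state management, in Python. `AgentState`, `ToolResult`, and the step and tool names are hypothetical. The point is that progress and tool outputs live in a structured object outside the model, and each prompt receives a compact summary of that object rather than the raw history.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolResult:
    """One structured entry in the tool-output log (hypothetical schema)."""
    step: str
    tool: str
    summary: str  # short, pre-digested result rather than the raw API response
    ok: bool


@dataclass
class AgentState:
    """Explicit workflow state, kept outside the model's context window."""
    objective: str
    plan: list[str]
    completed: list[str] = field(default_factory=list)
    tool_log: list[ToolResult] = field(default_factory=list)

    def current_step(self) -> Optional[str]:
        # The first planned step that has not yet succeeded.
        for step in self.plan:
            if step not in self.completed:
                return step
        return None

    def record(self, result: ToolResult) -> None:
        # Log every outcome; only successful results advance the plan.
        self.tool_log.append(result)
        if result.ok and result.step not in self.completed:
            self.completed.append(result.step)

    def context_for_step(self, max_results: int = 3) -> str:
        # Feed the model the objective, progress, and only the latest results.
        lines = [
            f"Objective: {self.objective}",
            f"Completed: {', '.join(self.completed) or 'none'}",
            f"Current step: {self.current_step() or 'done'}",
            "Recent tool results:",
        ]
        for r in self.tool_log[-max_results:]:
            status = "ok" if r.ok else "FAILED"
            lines.append(f"- [{r.tool}] {status}: {r.summary}")
        return "\n".join(lines)


state = AgentState(objective="Refund order 1042",
                   plan=["lookup_order", "check_policy", "issue_refund"])
state.record(ToolResult("lookup_order", "orders_api", "order 1042: paid, 2 items", True))
state.record(ToolResult("check_policy", "policy_api", "timeout after 10s", False))
print(state.context_for_step())
```

The failed policy check stays visible in the log but does not mark the step complete, so the agent knows exactly where it is even after a partial failure.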

Trap 2: Brittle Connectors

A production agent is useless without reliable access to external systems. In practice, this means API integrations that handle authentication, rate limiting, error recovery, and schema validation.

Most teams build this hastily. OAuth tokens are cached but not refreshed. Retry logic does not implement exponential backoff. When an API fails, the agent retries indefinitely or cascades the failure silently.

A single broken integration becomes a production incident. The agent either stalls or makes decisions based on missing data.

The fix requires treating integrations as a first-class concern. Implement circuit breakers. Validate API schemas at runtime. Build fallback paths so the agent can degrade gracefully.
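The sketch below combines these fixes using only the Python standard library: a circuit breaker that stops calling a failing API, retries with capped exponential backoff and jitter, a runtime schema check, and a fallback value when all of them fail. All names are illustrative; in a real system you might use a schema library such as pydantic or jsonschema instead of the hand-rolled check.

```python
import random
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised instead of calling an API whose circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, then rejects calls
    until `reset_after` seconds pass; the next call is a single trial."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open: use the fallback path")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0
        return result


def call_with_backoff(fn, breaker, max_attempts=4, base_delay=0.5, max_delay=8.0,
                      sleep=time.sleep):
    """Retry with capped exponential backoff and full jitter; never retry forever."""
    for attempt in range(max_attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # do not hammer an API that is already known to be down
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))


def validate_order(payload: dict) -> dict:
    """Runtime schema check: reject responses with missing fields or wrong types."""
    expected = {"order_id": str, "status": str, "total": (int, float)}
    for key, typ in expected.items():
        if not isinstance(payload.get(key), typ):
            raise ValueError(f"schema violation on field {key!r}")
    return payload


def order_status_or_unknown(fetch, breaker, sleep=time.sleep) -> str:
    """Degrade gracefully: return 'unknown' so the agent escalates instead of guessing."""
    try:
        return validate_order(call_with_backoff(fetch, breaker, sleep=sleep))["status"]
    except Exception:
        return "unknown"
```

The `sleep` parameter is injectable so tests can skip real waiting. Note that an open circuit is re-raised immediately rather than retried, which is what keeps one broken integration from turning into a retry storm.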

Trap 3: The Polling Tax

Without an event-driven architecture, agents poll constantly: "Is the order ready? How about now?" Most of those calls return nothing new, so polling can burn ninety-five percent of your API quota and still never achieve real-time responsiveness.

Event-driven design is the alternative. The agent subscribes to system events and reacts when conditions change. This scales. It respects rate limits. It costs less.
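To illustrate the difference, here is a minimal in-process publish/subscribe sketch using asyncio. `EventBus` and the topic names are hypothetical stand-ins for whatever event source you actually have (a message broker, webhooks, a change feed). The agent handler runs only when a relevant event arrives, so there is no polling loop at all.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

Handler = Callable[[dict], Awaitable[None]]


class EventBus:
    """In-process stand-in for a real event source (broker, webhooks, change feed)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    async def publish(self, topic: str, event: dict) -> None:
        # Push the event to each subscriber; nobody has to ask "is it ready?"
        for handler in self._subscribers.get(topic, []):
            await handler(event)


async def main() -> List[str]:
    bus = EventBus()
    actions: List[str] = []

    async def on_order_ready(event: dict) -> None:
        # The agent does work only when the state it cares about has changed.
        actions.append(f"resume fulfillment workflow for order {event['order_id']}")

    bus.subscribe("order.ready", on_order_ready)
    await bus.publish("order.shipped", {"order_id": "1041"})  # no subscriber: ignored
    await bus.publish("order.ready", {"order_id": "1042"})    # agent reacts once
    return actions


if __name__ == "__main__":
    print(asyncio.run(main()))
```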

The Governance Framework That Works: Observe, Reason, Act, Learn

Enterprises that have moved past pilot projects follow a consistent pattern. They do not just deploy agents; they govern them.

The framework has four steps:

Observe: The agent continuously monitors system state. It collects signals from APIs, databases, user inputs, and business rules. Observation is not passive. It is active filtering.

Reason: The agent analyzes the signal and determines what should happen next. This is where the LLM does its work. But reasoning is bounded. The agent reasons within guardrails: authorized tool calls only, defined decision boundaries, approved escalation paths.

Act: The agent executes its decision within predefined authority limits. A payment processing agent might approve transfers below a threshold but escalate above it. A support agent might resolve common issues but escalate complex disputes to a human.

Learn: The agent's decisions are logged, evaluated, and fed back. Over time, the system improves its accuracy and cost efficiency. Importantly, learning is continuous, not a static model freeze.
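The four steps can be sketched in a few lines. In this illustrative Python example, `reason()` stands in for the LLM call, while `act()` enforces the guardrails regardless of what the model proposes: only authorized tools run, and transfers above a hypothetical `APPROVAL_LIMIT` go to a human. "Learn" is reduced here to an audit log that later evaluation can consume. The tool names and the limit are assumptions.

```python
from dataclasses import dataclass, field

AUTHORIZED_TOOLS = {"approve_transfer", "escalate_to_human"}  # hypothetical tool names
APPROVAL_LIMIT = 10_000  # hypothetical authority limit for this agent


@dataclass
class Decision:
    action: str
    reason: str


@dataclass
class GovernedAgent:
    audit_log: list = field(default_factory=list)

    def observe(self, request: dict) -> dict:
        # Active filtering: pass on only the fields the decision needs.
        return {k: request[k] for k in ("id", "amount", "flagged") if k in request}

    def reason(self, signal: dict) -> Decision:
        # Stand-in for the LLM call. It may propose anything; act() enforces limits.
        if signal.get("flagged"):
            return Decision("escalate_to_human", "flagged by upstream checks")
        return Decision("approve_transfer", "request looks routine")

    def act(self, signal: dict, decision: Decision) -> str:
        if decision.action not in AUTHORIZED_TOOLS:
            return "escalate_to_human"  # unauthorized tool: never executed
        amount = signal.get("amount", float("inf"))  # missing amount counts as over limit
        if decision.action == "approve_transfer" and amount > APPROVAL_LIMIT:
            return "escalate_to_human"  # outside this agent's authority
        return decision.action

    def learn(self, signal: dict, decision: Decision, executed: str) -> None:
        # Log proposal vs. executed action so humans can evaluate and feed back.
        self.audit_log.append({"signal": signal, "proposed": decision.action,
                               "reason": decision.reason, "executed": executed})

    def handle(self, request: dict) -> str:
        signal = self.observe(request)
        decision = self.reason(signal)
        executed = self.act(signal, decision)
        self.learn(signal, decision, executed)
        return executed


agent = GovernedAgent()
print(agent.handle({"id": "t1", "amount": 2_500, "memo": "supplier invoice"}))
print(agent.handle({"id": "t2", "amount": 50_000}))
```

Keeping the limit check in `act()` rather than trusting the model's reasoning is the design choice that matters: the second request is escalated even though the stand-in model proposed approving it, and the audit log records that gap.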

This framework is not new. It is how humans operate in regulated industries. Finance, healthcare, and government have governed autonomous decision-making for decades. AI agents are finally making that governance visible.

The enterprises doing this well treat governance as a feature, not a compliance burden. Governance enables faster deployment because it surfaces failures early. It reduces risk because actions are auditable.

What Production-Ready Actually Means

The term "production-ready" is abused. Most vendors use it to mean "works in a test harness." That is not production-ready.

Production-ready means:

The agent completes three- to five-minute workflows reliably, where reliable means an eighty-five to ninety-five percent success rate on the first attempt. This is not a chatbot. This is an autonomous workflow.

The agent operates within strict resource bounds. It does not consume unlimited tokens. It does not retry indefinitely. It respects latency budgets. For a five-minute workflow, each step might have a two-minute timeout.

Failures are observable. Every decision, every tool call, every failure is logged with enough detail to understand why it happened. You can trace a failure back to model behavior, tool integration, or data quality issues.

The agent degrades gracefully. When it hits a limit or encounters an unknown situation, it escalates to a human. It does not fail silently. It does not retry endlessly.

Humans remain in the loop where it matters. The agent recommends; a human approves. The agent acts within guardrails; humans audit. The agent learns from feedback; humans validate that learning is correct.
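One way to enforce these bounds is to wrap every step in a budget that checks time and tokens and turns any breach into an escalation instead of a retry. This asyncio sketch assumes each step is an async callable returning `(result, tokens_used)`; `RunBudget` and its defaults (a token cap, two minutes per step, five minutes overall) are illustrative values, not prescribed ones.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, List, Tuple

Step = Callable[[], Awaitable[Tuple[str, int]]]  # returns (result, tokens_used)


class BudgetExceeded(Exception):
    """Signals that the workflow must stop and hand off to a human."""


@dataclass
class RunBudget:
    max_tokens: int = 20_000        # hypothetical token cap for one workflow
    step_timeout_s: float = 120.0   # cap on any single step
    total_timeout_s: float = 300.0  # cap on the whole workflow
    tokens_used: int = 0

    def charge(self, tokens: int) -> None:
        self.tokens_used += tokens
        if self.tokens_used > self.max_tokens:
            raise BudgetExceeded(f"token budget exceeded ({self.tokens_used} tokens)")


async def run_step(budget: RunBudget, step: Step) -> str:
    try:
        result, tokens = await asyncio.wait_for(step(), timeout=budget.step_timeout_s)
    except asyncio.TimeoutError:
        raise BudgetExceeded("step timed out") from None
    budget.charge(tokens)
    return result


async def run_workflow(budget: RunBudget, steps: List[Step]) -> str:
    async def run_all() -> str:
        for step in steps:
            await run_step(budget, step)  # no retries here: a breach ends the run
        return "completed"

    try:
        return await asyncio.wait_for(run_all(), timeout=budget.total_timeout_s)
    except (BudgetExceeded, asyncio.TimeoutError) as exc:
        # Escalate instead of failing silently or retrying endlessly.
        return f"escalated to human: {str(exc) or 'workflow timed out'}"
```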

The Observable Metrics That Matter

You cannot govern what you cannot measure.

Most teams instrument traditional metrics: latency, error rates, throughput. These tell you whether the system is running. They do not tell you whether the agent is working correctly.

Production agents require agent-specific metrics:

Latency across decision steps: A five-minute workflow has multiple steps. Is the agent spending ninety seconds reasoning about which tool to use? That is a signal. Is one tool integration consistently slow? Track it per tool.

Error rates by failure mode: Not all errors are equal. An API timeout is different from a tool invocation that returned invalid data. Track both separately so you can target fixes.

Token usage and cost: This is where AI-first systems differ from traditional software. Each inference costs money. Retries, expanded context windows, and tool chain complexity all inflate costs. For a task that should use one thousand tokens, consuming five thousand is a fivefold cost multiplier and a signal of inefficiency.

Output quality and compliance: Is the agent producing accurate decisions? Is it following guardrails? Is it hallucinating? Quality metrics require human labeling early, then automated evaluation once you have baselines.

Behavioral drift: Over time, agent behavior changes. Model updates, data changes, and production feedback all shift behavior. Behavioral drift that violates guardrails is a failure you need to catch before users do.

These metrics require observability infrastructure. Traditional monitoring was not built for non-deterministic systems. You need to instrument prompts, tool calls, and decision trees as first-class signals.
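A minimal sketch of what recording these signals might look like, using an in-memory aggregator. `AgentMetrics`, its methods, and the tool names are hypothetical; in production you would export the same data to your tracing or metrics backend rather than keep it in memory. The token multiplier reproduces the arithmetic from the token-usage metric: five thousand tokens against an expected one thousand gives 5.0.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from statistics import median
from typing import Optional


@dataclass
class AgentMetrics:
    """In-memory aggregator for agent-specific signals (illustrative only)."""
    tool_latency_s: dict = field(default_factory=lambda: defaultdict(list))
    errors: Counter = field(default_factory=Counter)  # keyed by (tool, failure_mode)
    tokens_used: int = 0

    def record_tool_call(self, tool: str, latency_s: float,
                         failure_mode: Optional[str] = None) -> None:
        self.tool_latency_s[tool].append(latency_s)
        if failure_mode:
            # Keep failure modes separate: a timeout is not the same as bad data.
            self.errors[(tool, failure_mode)] += 1

    def record_llm_call(self, prompt_tokens: int, completion_tokens: int) -> None:
        self.tokens_used += prompt_tokens + completion_tokens

    def token_multiplier(self, expected_tokens: int) -> float:
        # Ratio of actual to expected usage; 1.0 means on budget.
        return self.tokens_used / expected_tokens

    def slowest_tool(self) -> str:
        # Median latency per tool, so a single outlier does not dominate.
        return max(self.tool_latency_s, key=lambda t: median(self.tool_latency_s[t]))


m = AgentMetrics()
m.record_tool_call("crm_api", 0.4)
m.record_tool_call("crm_api", 2.5, failure_mode="timeout")
m.record_tool_call("crm_api", 0.3, failure_mode="invalid_data")
m.record_tool_call("search", 0.1)
m.record_llm_call(prompt_tokens=4_000, completion_tokens=1_000)
print(m.token_multiplier(expected_tokens=1_000))  # 5.0
print(m.slowest_tool(), dict(m.errors))
```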

Implement this early. If you wait until production, you will be debugging with incomplete data.

Real Enterprise Outcomes in 2026

The data is starting to emerge on what actually works.

Enterprises running reasoning-based document processing (intelligent document processing with agentic workflows) report ninety-eight percent accuracy, compared with ninety percent for manual processing. More importantly, they report a seventy to eighty percent reduction in processing time and a return on investment within three to six months.

This is because document processing workflows are well-defined. The agent has clear objectives. The tool set is bounded. The success criteria are measurable.

SaaS companies using AI code generation report that eighty percent of the code flowing into production is AI-generated. The critical caveat: they reached that figure only after addressing quality concerns. The upfront velocity gain is real. So is the maintenance burden. Teams that succeed treat AI-generated code as needing stricter validation, not less.

Enterprises deploying multi-step workflows in finance and operations report cost reductions in the range of forty to sixty percent for the target process. But this requires strict governance. Without it, cost overruns and quality failures offset the efficiency gain.

The Australian Regulatory Context

If you are building agents for Australian organizations, regulatory requirements are no longer optional.

APRA (the Australian Prudential Regulation Authority) requires regulated financial institutions to ensure that information assets managed by third parties, including third-party AI systems, meet its security and governance standards. CPS 234 (Information Security) is the relevant standard. It is not abstract. It requires auditable governance.

The Australian Privacy Principles make you accountable for personal data you disclose overseas (APP 8), and many government and regulated-sector contracts go further by requiring that data stay in Australian data centers. Processing residency is critical, not just storage residency. If your AI agent routes inference through overseas providers, you may breach your privacy or contractual obligations.

Government agencies must maintain an internal register of all AI use cases as of June 2026. For agencies considering agentic systems, this means documenting the agent's purpose, boundaries, tool access, escalation paths, and audit trails.
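Keeping each register entry as structured data makes it easy to query and to check for gaps before sign-off. The record below mirrors the items just listed (purpose, boundaries, tool access, escalation path, audit trail); the class, field names, and example values are illustrative and are not an official government template.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class AIUseCaseRecord:
    """One entry in an internal AI use-case register (illustrative fields)."""
    name: str
    purpose: str
    boundaries: Tuple[str, ...]
    tool_access: Tuple[str, ...]
    escalation_path: str
    audit_trail: str  # where prompts, tool calls, and decisions are logged

    def missing_fields(self) -> List[str]:
        # Empty strings or empty tuples mean the entry is not ready for sign-off.
        return [name for name, value in asdict(self).items() if not value]


record = AIUseCaseRecord(
    name="grants-triage-agent",
    purpose="Pre-screen grant applications for completeness before human assessment",
    boundaries=("no funding decisions", "no contact with applicants"),
    tool_access=("document_store.read", "case_system.add_note"),
    escalation_path="Grants team lead, within one business day",
    audit_trail="All prompts, tool calls, and outputs retained in the agency log store",
)
print(record.missing_fields())  # []
print(json.dumps(asdict(record), indent=2))
```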

If you are implementing agents for Australian organizations, these are not future concerns. They are active requirements now.

Building Production Agents with Azure and Semantic Kernel

If you are building on Microsoft's stack, the technical path is clear.

Semantic Kernel provides the orchestration layer. It handles prompting, tool management, and agentic patterns. The ChatCompletionAgent class is the foundation. It inherits memory management, tool invocation, and loop control.

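For Australian deployments, create Azure OpenAI resources in Australia East or Australia Southeast and use a regional (Standard) deployment type. Global deployment types may process requests in any Azure region, so choosing an onshore region alone does not guarantee that processing stays onshore.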
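A pre-deployment check can catch residency mistakes before they reach production. This sketch assumes you can list your inference endpoints with their Azure region name and deployment SKU name (for example from your infrastructure-as-code); the `InferenceEndpoint` type is hypothetical, and treating every SKU whose name starts with "Global" or "DataZone" as potentially offshore is a deliberately conservative assumption.

```python
from dataclasses import dataclass
from typing import List

ONSHORE_REGIONS = {"australiaeast", "australiasoutheast"}
OFFSHORE_PRONE_SKU_PREFIXES = ("Global", "DataZone")  # conservative assumption


@dataclass(frozen=True)
class InferenceEndpoint:
    name: str
    region: str          # Azure region of the resource, e.g. "australiaeast"
    deployment_sku: str  # e.g. "Standard" or "GlobalStandard"


def residency_violations(endpoints: List[InferenceEndpoint]) -> List[str]:
    """Return human-readable problems; an empty list means the check passed."""
    problems = []
    for ep in endpoints:
        if ep.region.lower() not in ONSHORE_REGIONS:
            problems.append(f"{ep.name}: resource region {ep.region!r} is not onshore")
        if ep.deployment_sku.startswith(OFFSHORE_PRONE_SKU_PREFIXES):
            problems.append(f"{ep.name}: {ep.deployment_sku} may process requests outside the region")
    return problems


endpoints = [
    InferenceEndpoint("claims-agent", "australiaeast", "Standard"),
    InferenceEndpoint("triage-agent", "australiaeast", "GlobalStandard"),
]
for problem in residency_violations(endpoints):
    print(problem)
```

Run as a CI step, a non-empty result would fail the build, so an offshore deployment has to be a deliberate, reviewed decision rather than a default.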

A practical pattern:

Define your agent with explicit instructions and bounded tool sets. Do not give agents access to twenty tools. Start with three to five. Add tools only after validating the core workflow.

Implement structured state management. Instead of relying on chat history, maintain an explicit state object tracking progress, completed steps, and tool outcomes.

Use the ReAct framework (Reason-Act-Observe) explicitly. The agent verbalizes its reasoning, takes an action, observes the outcome, and decides whether to continue. This makes agent behavior interpretable.

Set iteration limits. A well-designed agent should complete its task in ten to fifteen decision steps. If it requires fifty steps, the workflow is too complex or the agent lacks a necessary tool.

Implement observability from day one. Log every tool call, every LLM invocation, every decision. Use structured logging so you can query and analyze agent behavior after deployment.

Test failure modes before production. What happens if a tool is unavailable? What happens if the LLM times out? What happens if the agent hits its iteration limit? Build fallback paths for each.
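The pattern above fits in a short, framework-agnostic sketch. The Python below is not Semantic Kernel code: `llm` is any callable you supply that returns one reasoning step as a dict (a hypothetical shape), `ToolRegistry` caps the tool set at five, every step is logged as structured JSON, tool failures come back as observations rather than crashes, and the loop escalates after fifteen steps. `scripted_llm` is a hypothetical stand-in for a real model.

```python
import json
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("agent")

MAX_TOOLS = 5    # start small; add tools only after the core workflow is validated
MAX_STEPS = 15   # iteration limit: beyond this the workflow is too complex


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, fn: Callable[[str], str]) -> None:
        if len(self._tools) >= MAX_TOOLS:
            raise ValueError(f"tool limit of {MAX_TOOLS} reached")
        self._tools[name] = fn

    def call(self, name: str, arg: str) -> str:
        # Failures come back as observations the model can reason about.
        if name not in self._tools:
            return f"ERROR: unknown tool {name!r}"
        try:
            return self._tools[name](arg)
        except Exception as exc:
            return f"ERROR: tool {name!r} failed: {exc}"


def run_react(llm: Callable[[List[dict]], dict], tools: ToolRegistry, task: str) -> str:
    """Reason, act, observe, repeat until a final answer or the step limit."""
    transcript: List[dict] = [{"role": "task", "content": task}]
    for step in range(1, MAX_STEPS + 1):
        try:
            # Assumed shape: {"thought", "action", "input"} or {"thought", "final"}.
            move = llm(transcript)
        except TimeoutError:
            return "ESCALATE: model call timed out"
        log.info(json.dumps({"step": step, "thought": move.get("thought"),
                             "action": move.get("action"), "final": move.get("final")}))
        if "final" in move:
            return move["final"]
        observation = tools.call(move.get("action", ""), move.get("input", ""))
        log.info(json.dumps({"step": step, "observation": observation}))
        transcript.append({"role": "observation", "content": observation})
    return "ESCALATE: iteration limit reached"


def scripted_llm(transcript: List[dict]) -> dict:
    # Hypothetical stand-in for a real model: look up once, then answer.
    if len(transcript) == 1:
        return {"thought": "I need the order status", "action": "order_status", "input": "1042"}
    return {"thought": "I have what I need", "final": f"Order 1042 is {transcript[-1]['content']}"}


tools = ToolRegistry()
tools.register("order_status", lambda order_id: "shipped")
print(run_react(scripted_llm, tools, "Where is order 1042?"))
```

Because every thought, action, and observation is emitted as one JSON line, the same logs answer the failure-mode questions: an unavailable tool shows up as an `ERROR:` observation, and a runaway loop ends in a single, queryable escalation.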

The Barbell Effect: Where Real Value Lives

AI has commoditized routine software. A CMS, a basic dashboard, a simple integration: these can now be generated in days rather than months.

This shifts engineering economics fundamentally.

The valuable software is at the extremes. On one end is commodity code that is cheap to generate and easy to copy. On the other is highly specialized code for regulated, mission-critical, or deeply integrated systems.

The middle is shrinking.

For AI agents, this means the strategic value lies in:

Deep domain expertise (healthcare compliance, financial regulations, industry-specific rules). Generic agents cannot encode this. Agents that do command premium value.

Proprietary data and workflows. An agent trained on your specific business processes and data is hard to replicate. This is defensible value.

Custom integration logic. Agents that connect your specific systems, handle your specific handoff protocols, and operate within your specific constraints are not commodities.

Extreme performance or cost optimization. Where latency, throughput, or cost matter critically, agents need expert design.

For CTOs and engineering leaders, this is the implication: do not use AI agents to build what the market treats as commodity software. Use them to augment the twenty percent of systems where differentiation, compliance, and integration truly matter.

The 2026 Deployment Checklist

Before moving any agentic system to production:

Governance: Define the agent's purpose, authorized tool access, decision boundaries, and escalation paths. Document this. Make it auditable.

State Management: Do not rely on chat history as memory. Build explicit state tracking. Validate that the agent maintains context correctly across long workflows.

Observability: Instrument prompts, tool calls, decisions, and outcomes. You cannot debug what you cannot see.

Failure Modes: Test what happens when tools fail, timeouts occur, and the agent hits limits. Build graceful degradation.

Regulatory Alignment: If handling personal data, ensure data residency. If in regulated industries, align with sector-specific requirements. For Australian deployments, ensure compliance with APRA standards and the Australian Privacy Principles where they apply.

Human Oversight: Define where humans review decisions. Not every step, but the ones that matter.

Continuous Monitoring: After deployment, continuously monitor agent behavior. Watch for cost creep, quality degradation, and drift from intended behavior.

The Path Forward

The narrative around AI agents has shifted from "will this work?" to "how do we make this work reliably?" This is progress.

Enterprise deployments in 2026 show that agentic AI works for well-defined, bounded, measurable workflows. It does not work for vague objectives, tool overload, or governance-averse organizations.

The organizations winning with AI agents are treating them as systems, not magic. They are building observability early. They are limiting scope ruthlessly. They are testing failure modes. They are treating governance as an accelerator, not a constraint.

For engineering leaders, the imperative is clear: if you have not started planning production agent deployments, the question is no longer whether to deploy, but how to do it safely. The competitive advantage goes to teams that can deploy reliably, not teams that deploy fast.

Related Reading

- Autonomous AI Agents in 2026: From Automation to Agentic Intelligence - The broader landscape of agentic AI and a 90-day implementation roadmap for enterprises.

- Building AI Agents with n8n: Complete 2026 Implementation Guide - Hands-on guide to architecting AI agents with n8n, LangChain integration, and workflow automation patterns.

- n8n vs Make vs Zapier: Enterprise Automation Decision Framework for 2026 - Platform comparison covering cost, data sovereignty, and compliance for Australian enterprises.

- How to Deploy n8n on Azure VMs: A Complete Production Setup Guide - Step-by-step Azure deployment with Docker, security hardening, and automated backups.

Ready to Build Production-Ready Agents?

At Hrishi Digital Solutions, we specialize in governing complex AI deployments for mission-critical systems. We have guided enterprises through agentic AI pilots, built observability infrastructure, and integrated agents with legacy systems while maintaining regulatory compliance.

Whether you are exploring agentic patterns for the first time or scaling agents across your organization, we understand the technical and governance challenges specific to Australian enterprises.

Request a free architecture review to assess your agent deployment readiness and identify the governance framework and implementation path that fits your systems. We will provide a concrete recommendation on phasing, risk mitigation, and realistic success metrics.

Contact our team to discuss how to position your organization to deploy production-ready AI agents with confidence.

Hrishi Digital Solutions specializes in enterprise digital transformation, cloud architecture, and AI integration for mission-critical systems. Based in Australia, we serve government, finance, and regulated industries where reliability, compliance, and performance are non-negotiable.

Hrishi Digital Solutions

Enterprise digital solutions provider specializing in AI integration, cloud architecture, and mission-critical systems.
