Building with Claude & .NET: A Developer's Guide to AI Integration

Master Claude AI integration with .NET. Learn direct API calls, streaming, multi-turn conversations, and prompt engineering patterns with production-ready code examples for full-stack developers.

Artificial intelligence has moved from experimental research to practical, production-grade enterprise applications. For .NET developers and architects, integrating large language models (LLMs) into your systems opens transformative possibilities, from intelligent customer support to sophisticated code and data analysis.

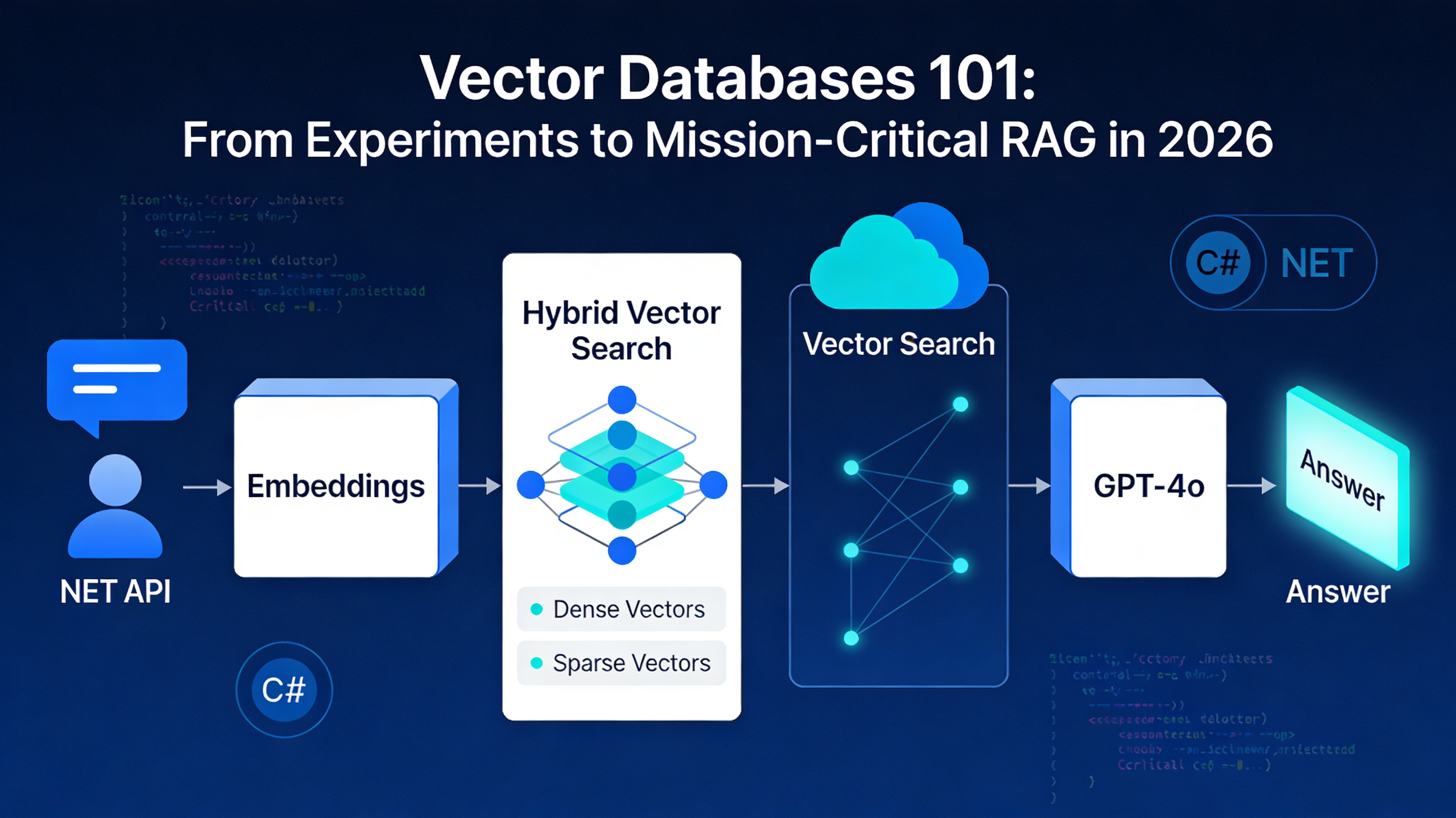

Yet the integration landscape remains fragmented, with numerous options and patterns to navigate. This guide provides a comprehensive, practical approach to integrating Claude AI with .NET using the official Anthropic C# SDK, covering everything from foundational API calls to advanced patterns like streaming responses and multi-turn conversations. For workflow-level automation with Claude, see our guide on Claude Code Skills for .NET developers.

We've included code examples that you can adapt for your own projects.

The AI Integration Landscape: Why Claude for .NET Developers?

The market now offers multiple LLM options for .NET integration, each with distinct strengths. Understanding the landscape helps you make informed architectural decisions for your applications.

OpenAI's GPT models remain the most widely adopted choice, offering mature SDKs and extensive community support. However, they come with considerations around data privacy, API costs, and rate limiting for enterprise deployments.

Google's Gemini provides strong multimodal capabilities and competitive pricing, with particularly good integration for organizations already embedded in the Google Cloud ecosystem.

Anthropic's Claude differentiates itself through Constitutional AI training, an approach designed to produce careful, well-reasoned responses with robust safety properties. Claude is known for strong reasoning and for following detailed, nuanced prompts closely. Both qualities are critical for enterprise applications where accuracy directly impacts business outcomes.

Open-source alternatives like Llama or Mistral offer self-hosting options but require significant infrastructure investment and ongoing maintenance overhead that often exceeds their cost benefits for growing organizations.

Claude vs. Competitors: Why It Matters for .NET Developers

When selecting an LLM platform, .NET architects should evaluate across multiple dimensions beyond raw API capability.

| Feature | Claude (Sonnet) | GPT-4o | Gemini |
|---|---|---|---|
| Context Window | 200K tokens | 128K tokens | Up to 2M tokens |
| Cost per 1M tokens (input/output) | $3 / $15 | $2.50 / $10 | Varies by tier |
| Reasoning Capability | Excellent | Excellent | Very good |
| Constitutional AI | Yes (built-in safety) | Requires prompting | No |
| Streaming Support | Yes | Yes | Yes |
| .NET SDK | Official (beta) ✅ | Excellent | Good |
| Enterprise SLA | Available | Available | Available |
| Data Privacy | Strong guarantees | Requires agreement | Google terms |

Claude's 200K context window proves invaluable for scenarios involving lengthy documents, entire codebase analysis, or comprehensive conversation histories. The Constitutional AI approach means fewer workarounds for safety guardrails: they are trained into the model rather than bolted on through prompting.

For .NET teams, this translates to fewer hours spent fighting the model and more time building features. The cost structure also scales better for high-volume applications, particularly when leveraging the Haiku model for cost-sensitive workloads.

Setting Up: Installing the Official SDK

Good news: Anthropic now provides an official C# SDK. As of December 2025, the Anthropic NuGet package (version 10 and later) is Anthropic's official SDK for .NET, currently in beta.

Install the Official SDK

```bash
dotnet add package Anthropic
```

Verify that the package was added to your project:

```bash
dotnet list package
```

You should see the Anthropic package listed (version 10+).

Configure API Key

Create an API key at console.anthropic.com. Set it as an environment variable (never hardcode credentials in production):

```bash
# macOS/Linux
export ANTHROPIC_API_KEY="your-key-here"
```

```powershell
# Windows PowerShell
$env:ANTHROPIC_API_KEY="your-key-here"
```

```bat
:: Windows Command Prompt
set ANTHROPIC_API_KEY=your-key-here
```

In production, use Azure Key Vault, AWS Secrets Manager, or similar secrets storage.
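Whichever store you use, it helps to fail fast at startup when the key is missing. That way a misconfigured deployment surfaces immediately instead of on the first API call. Below is a minimal sketch. `RequireApiKey` is a hypothetical helper, not part of the SDK, and the default variable name matches the one the client reads.

```csharp
using System;

// Hypothetical helper (not part of the Anthropic SDK): read a required secret from the
// environment and throw a clear error at startup if it is missing or blank.
static string RequireApiKey(string variableName = "ANTHROPIC_API_KEY")
{
    string? value = Environment.GetEnvironmentVariable(variableName);
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException(
            $"{variableName} is not set. Configure it as an environment variable or in your secrets store.");
    }
    return value;
}
```

Call it once during startup, before constructing the client, so a missing key stops the service with an explicit message.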

Pattern 1: Direct API Calls - Your Foundation

The simplest integration pattern executes a single API call and awaits the complete response. This works well for non-time-sensitive operations like batch processing or one-shot queries.

```csharp
using Anthropic;

// Initialize the client (reads the ANTHROPIC_API_KEY environment variable)
var client = new AnthropicClient();

// Create a message request
var response = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022", // Claude 3.5 Sonnet; check Anthropic's models overview for current IDs
    MaxTokens = 1024,
    Messages = new List<MessageParam>
    {
        new MessageParam
        {
            Role = MessageRole.User,
            Content = "Explain the concept of dependency injection in .NET in two sentences."
        }
    }
});

// Extract the response text
var responseText = response.Content[0].Text;
Console.WriteLine(responseText);

// Monitor token usage (critical for cost tracking)
Console.WriteLine($"Input tokens: {response.Usage?.InputTokens}");
Console.WriteLine($"Output tokens: {response.Usage?.OutputTokens}");

// Estimate cost for Claude 3.5 Sonnet: $3 per million input tokens, $15 per million output tokens
if (response.Usage != null)
{
    var inputCost = (response.Usage.InputTokens / 1_000_000.0) * 3.0;
    var outputCost = (response.Usage.OutputTokens / 1_000_000.0) * 15.0;
    Console.WriteLine($"Estimated cost: ${(inputCost + outputCost):F4}");
}
```

This pattern establishes the basic contract: you send a CreateMessageRequest with a model identifier, maximum tokens to generate, and a list of messages, then receive a Message object containing the response.

Key benefits:

- Simple and straightforward

- Perfect for batch operations

- Complete response in a single payload (it can still be cut off if it reaches MaxTokens)

- Easy cost calculation

Pattern 2: Streaming Responses - Real-Time User Experiences

Direct API calls feel slow in interactive applications. Streaming returns tokens incrementally as Claude generates them, dramatically improving perceived performance.

Imagine a code review assistant that displays its analysis as it is generated, instead of making the user wait for the complete result:

```csharp
using Anthropic;

var client = new AnthropicClient();

var response = await client.Messages.StreamAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 2048,
    Messages = new List<MessageParam>
    {
        new MessageParam
        {
            Role = MessageRole.User,
            Content = @"Review this C# method for performance issues:

public List<User> GetActiveUsers(List<User> allUsers)
{
    var result = new List<User>();
    foreach (var user in allUsers)
    {
        if (user.IsActive && user.LastLogin > DateTime.Now.AddDays(-30))
        {
            result.Add(user);
        }
    }
    return result;
}"
        }
    }
});

// Stream tokens as they arrive
await foreach (var streamMessage in response)
{
    if (streamMessage.Type == "content_block_delta")
    {
        var delta = streamMessage as ContentBlockDeltaEvent;
        if (delta?.Delta is TextDelta textDelta)
        {
            // Write each text fragment without a newline for real-time display
            Console.Write(textDelta.Text);
        }
    }
}

Console.WriteLine(); // Final newline
```

Streaming is crucial for web applications where users expect progressive feedback. A 10-second stream feels responsive; a 10-second wait is frustrating.

Here's a more sophisticated pattern that buffers tokens into complete words before UI updates:

```csharp
using Anthropic;
using System.Text;

var client = new AnthropicClient();

var response = await client.Messages.StreamAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    Messages = new List<MessageParam>
    {
        new MessageParam
        {
            Role = MessageRole.User,
            Content = "Write a haiku about .NET"
        }
    }
});

var tokenBuffer = new StringBuilder();      // Text not yet emitted (may end mid-word)
var completeResponse = new StringBuilder(); // Full response, e.g. for logging or persistence

await foreach (var streamMessage in response)
{
    if (streamMessage.Type == "content_block_delta")
    {
        var delta = streamMessage as ContentBlockDeltaEvent;
        if (delta?.Delta is TextDelta textDelta)
        {
            tokenBuffer.Append(textDelta.Text);
            completeResponse.Append(textDelta.Text);

            // Emit everything up to and including the last word break (space or newline),
            // so the separators between words are preserved in the UI
            string text = tokenBuffer.ToString();
            int lastBreak = text.LastIndexOfAny(new[] { ' ', '\n' });
            if (lastBreak >= 0)
            {
                OnTokensReceived(text[..(lastBreak + 1)]);
                tokenBuffer.Clear();
                tokenBuffer.Append(text[(lastBreak + 1)..]);
            }
        }
    }
}

// Handle remaining buffered text
if (tokenBuffer.Length > 0)
{
    OnTokensReceived(tokenBuffer.ToString());
}

Console.WriteLine();

void OnTokensReceived(string tokens)
{
    // Update UI, log, or send to client; each chunk already ends with its separator
    Console.Write(tokens);
}
```

Pattern 3: Multi-Turn Conversations - Building Context

Most intelligent applications require multi-turn exchanges where context accumulates. The Messages API itself is stateless. Your application keeps the conversation in a messages list that alternates between user and assistant roles, and it sends the whole list with every request.

```csharp
using Anthropic;

var client = new AnthropicClient();
var conversationHistory = new List<MessageParam>();

// Turn 1: User's first message
conversationHistory.Add(new MessageParam
{
    Role = MessageRole.User,
    Content = "I'm building a real-time notification system in .NET. What should I consider?"
});

var response1 = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    Messages = conversationHistory
});

string assistantResponse1 = response1.Content[0].Text;

// Record Claude's reply so the next request includes it
conversationHistory.Add(new MessageParam
{
    Role = MessageRole.Assistant,
    Content = assistantResponse1
});

Console.WriteLine($"Assistant: {assistantResponse1}\n");

// Turn 2: User's follow-up question
conversationHistory.Add(new MessageParam
{
    Role = MessageRole.User,
    Content = "What about handling high throughput scenarios? I expect 100k+ notifications per minute."
});

var response2 = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    Messages = conversationHistory
});

string assistantResponse2 = response2.Content[0].Text;

conversationHistory.Add(new MessageParam
{
    Role = MessageRole.Assistant,
    Content = assistantResponse2
});

Console.WriteLine($"Assistant: {assistantResponse2}\n");

// Turn 3: the full history lets Claude resolve "these approaches" from earlier turns
conversationHistory.Add(new MessageParam
{
    Role = MessageRole.User,
    Content = "Which of these approaches scales better with SignalR?"
});

var response3 = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    Messages = conversationHistory
});

Console.WriteLine($"Assistant: {response3.Content[0].Text}");
```

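This pattern maintains conversation context for users, enabling Claude to reference earlier statements and build coherent multi-step reasoning. Because the full history is sent with every request, input tokens (and cost) grow with each turn. Long conversations therefore eventually need trimming or summarizing. For production applications, persist the conversation history to a database so conversations survive service restarts.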
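Each request resends the whole history, so a long conversation raises the input token count on every turn. One common mitigation is to keep only the most recent turns that fit a token budget. Below is a minimal sketch. It assumes messages are stored as simple (Role, Content) tuples rather than SDK types. `TrimHistory` is hypothetical, and the estimate of about 4 characters per token is the same rough heuristic used in the token-limit example later in this guide.

```csharp
using System.Collections.Generic;

// Hypothetical helper: keep the newest messages whose estimated size fits maxTokens.
// Messages are (Role, Content) tuples; tokens are estimated at ~4 characters each.
static List<(string Role, string Content)> TrimHistory(
    IReadOnlyList<(string Role, string Content)> history, int maxTokens)
{
    var kept = new List<(string Role, string Content)>();
    int used = 0;

    // Walk backwards from the newest message; the latest message is always kept
    for (int i = history.Count - 1; i >= 0; i--)
    {
        int estimate = history[i].Content.Length / 4 + 1;
        if (kept.Count > 0 && used + estimate > maxTokens)
        {
            break;
        }
        kept.Insert(0, history[i]);
        used += estimate;
    }

    // Conversations should open with a user turn, so drop any leading assistant messages
    while (kept.Count > 1 && kept[0].Role != "user")
    {
        kept.RemoveAt(0);
    }

    return kept;
}
```

When early context matters, summarizing the older turns into a single message is a common alternative to simply dropping them.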

Pattern 4: System Prompts and Context Engineering

System prompts define Claude's role and behavior throughout a conversation. This is where you embed your domain expertise and ensure consistent, appropriate responses.

```csharp
using Anthropic;

var client = new AnthropicClient();

var systemPrompt = @"You are an expert .NET security architect with 15+ years of enterprise experience.
Your role is to provide security guidance for .NET applications, focusing on OWASP Top 10 vulnerabilities,
Azure security best practices, and compliance frameworks like PCI-DSS and SOC 2.

When analyzing code or architecture:
1. Identify specific vulnerabilities with CVE references where applicable
2. Provide remediation code examples
3. Explain the business impact of the vulnerability
4. Recommend preventive measures for future development

Always maintain an educational tone and avoid alarmist language while emphasizing severity where warranted.
Cite official Microsoft documentation and OWASP guidelines.";

var response = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    System = systemPrompt, // System prompt shapes behavior
    Messages = new List<MessageParam>
    {
        new MessageParam
        {
            Role = MessageRole.User,
            Content = @"Is this connection string approach secure?

var connString = ConfigurationManager.ConnectionStrings[""DefaultConnection""].ConnectionString;
using (var connection = new SqlConnection(connString))
{
    connection.Open();
}"
        }
    }
});

Console.WriteLine(response.Content[0].Text);
```

System prompts transform Claude from a general-purpose assistant into a specialized domain expert. The specificity of your system prompt directly correlates with response quality; vague system prompts yield vague responses. For a comprehensive deep-dive into crafting effective prompts, see our complete prompt engineering mastery guide.

Here's an advanced pattern combining system prompts with conversation history:

```csharp
using Anthropic;

public class AICodeReviewer
{
    private const string SYSTEM_PROMPT = @"You are a meticulous .NET code reviewer. Evaluate code for:
- Performance (LINQ queries, allocations, caching)
- Security (injection risks, authentication, sensitive data)
- Maintainability (naming, complexity, documentation)
- .NET best practices and idioms
Format reviews as structured feedback with severity levels (Critical, High, Medium, Low).";

    private readonly AnthropicClient _client = new AnthropicClient();
    private readonly List<MessageParam> _conversationHistory = new List<MessageParam>();

    public Task<string> ReviewCodeAsync(string code) =>
        SendAsync($"Please review this code:\n\n```csharp\n{code}\n```");

    public Task<string> AskFollowUpAsync(string question) => SendAsync(question);

    // Adds the user turn, calls the API with the full history, and records the reply
    private async Task<string> SendAsync(string userContent)
    {
        _conversationHistory.Add(new MessageParam { Role = MessageRole.User, Content = userContent });

        try
        {
            var response = await _client.Messages.CreateAsync(new CreateMessageRequest
            {
                Model = "claude-3-5-sonnet-20241022",
                MaxTokens = 2048,
                System = SYSTEM_PROMPT,
                Messages = _conversationHistory
            });

            string reply = response.Content[0].Text;
            _conversationHistory.Add(new MessageParam { Role = MessageRole.Assistant, Content = reply });
            return reply;
        }
        catch
        {
            // Remove the unanswered user turn so the history keeps alternating user/assistant
            _conversationHistory.RemoveAt(_conversationHistory.Count - 1);
            throw;
        }
    }
}
```

Usage, for example from Program.cs:

```csharp
var reviewer = new AICodeReviewer();

var review = await reviewer.ReviewCodeAsync("public void Process(List<int> items) { ... }");
Console.WriteLine(review);

var followUp = await reviewer.AskFollowUpAsync("How would you optimize the LINQ query for large datasets?");
Console.WriteLine(followUp);
```

Common Integration Mistakes and How to Avoid Them

Integrating LLMs introduces subtle failure modes that don't exist in traditional application development.

Mistake 1: Ignoring Token Limits in the 200K Context Window

The 200K token limit feels infinite until you hit it. One common error involves loading entire application logs or documentation without checking length:

```csharp
// ❌ DANGEROUS: No length validation
var entireLogFile = System.IO.File.ReadAllText("application.log");

var response = await client.Messages.CreateAsync(new CreateMessageRequest
{
    Model = "claude-3-5-sonnet-20241022",
    MaxTokens = 1024,
    Messages = new List<MessageParam>
    {
        new MessageParam { Role = MessageRole.User, Content = $"Analyze this log:\n\n{entireLogFile}" }
    }
});

// ✅ CORRECT: Validate and summarize
string logContent = System.IO.File.ReadAllText("application.log");

// Rough estimate: ~4 characters per token for English text.
// The API's token-counting endpoint gives exact numbers when you need them.
int estimatedTokens = logContent.Length / 4;

if (estimatedTokens > 150_000)
{
    // Use Claude to summarize the logs first. This sends only the first 50,000
    // characters; for full coverage, summarize the file chunk by chunk.
    var summaryResponse = await client.Messages.CreateAsync(new CreateMessageRequest
    {
        Model = "claude-3-5-sonnet-20241022",
        MaxTokens = 4096,
        Messages = new List<MessageParam>
        {
            new MessageParam { Role = MessageRole.User, Content = $"Summarize these error patterns:\n\n{logContent[..50_000]}" }
        }
    });

    logContent = summaryResponse.Content[0].Text;
}

// logContent now fits comfortably in the context window and can be sent for analysis
```
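The summarization example above reads only the first 50,000 characters of the file, so anything later in the log is ignored. A common alternative is a map-reduce approach. You split the log into chunks that each fit comfortably in one request, summarize each chunk, and then summarize the summaries. Below is a minimal sketch of the splitting step, using the same estimate of about 4 characters per token. `ChunkByEstimatedTokens` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: split text into chunks of at most ~maxTokens tokens each
// (estimated at 4 characters per token), cutting after a newline where possible.
static List<string> ChunkByEstimatedTokens(string text, int maxTokens)
{
    if (maxTokens <= 0) throw new ArgumentOutOfRangeException(nameof(maxTokens));

    int maxChars = maxTokens * 4;
    var chunks = new List<string>();
    int start = 0;

    while (start < text.Length)
    {
        int length = Math.Min(maxChars, text.Length - start);

        // If more text follows, end the chunk just after the last newline in the window
        // so log lines are not split (falls back to a hard cut if the window has none)
        if (start + length < text.Length)
        {
            int lastNewline = text.LastIndexOf('\n', start + length - 1, length);
            if (lastNewline > start)
            {
                length = lastNewline - start + 1;
            }
        }

        chunks.Add(text.Substring(start, length));
        start += length;
    }

    return chunks;
}
```

Each chunk then goes through a summarization request like the one above, and a final request combines the per-chunk summaries.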

Mistake 2: Forgetting Error Handling and Rate Limits

Claude's API enforces rate limits and can also return transient errors when it is overloaded. Production code must handle both gracefully:

```csharp
// ❌ NAIVE: No retry logic
var response = await client.Messages.CreateAsync(request);

// ✅ ROBUST: Exponential backoff retry
// (a method on a class that holds the AnthropicClient in _client; this assumes failed calls
// surface as HttpRequestException, so adjust the catch to the exception type your SDK version throws)
public async Task<Message> CreateMessageWithRetryAsync(CreateMessageRequest request)
{
    const int maxRetries = 3;
    int delayMs = 1000; // Start with 1 second

    for (int attempt = 0; attempt < maxRetries; attempt++)
    {
        try
        {
            return await _client.Messages.CreateAsync(request);
        }
        // Retry only transient errors; on the final attempt the exception propagates
        catch (HttpRequestException ex) when (IsTransient(ex.StatusCode) && attempt < maxRetries - 1)
        {
            Console.WriteLine($"Transient error ({ex.StatusCode}). Retrying in {delayMs}ms...");
            await Task.Delay(delayMs);
            delayMs *= 2; // Exponential backoff: double the delay each time
        }
    }

    throw new InvalidOperationException("Max retries exceeded");
}

// 429 = rate limited, 503 = service unavailable, 529 = Anthropic API temporarily overloaded
private static bool IsTransient(System.Net.HttpStatusCode? status) =>
    status == System.Net.HttpStatusCode.TooManyRequests
    || status == System.Net.HttpStatusCode.ServiceUnavailable
    || status == (System.Net.HttpStatusCode)529;
```
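The method above is tied to one exception type and a fixed schedule. Below is a minimal sketch of a reusable alternative. `RetryAsync` is a hypothetical generic helper that retries any async operation when a predicate marks the failure as transient. It also adds random jitter, so clients that were throttled at the same moment do not all retry in lockstep. If a 429 response carries a retry-after header, waiting that long is usually better than a computed delay.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper (not part of the Anthropic SDK): retry an async operation while
// isTransient(ex) returns true, up to maxAttempts total attempts.
static async Task<T> RetryAsync<T>(
    Func<Task<T>> operation,
    Func<Exception, bool> isTransient,
    int maxAttempts = 3,
    int baseDelayMs = 1000)
{
    for (int attempt = 1; ; attempt++)
    {
        try
        {
            return await operation();
        }
        // On the last attempt, or for non-transient errors, the exception propagates unchanged
        catch (Exception ex) when (attempt < maxAttempts && isTransient(ex))
        {
            // Exponential backoff (base, 2x base, 4x base, ...) plus up to 50% random jitter
            int backoffMs = baseDelayMs * (1 << (attempt - 1));
            int jitterMs = Random.Shared.Next(0, backoffMs / 2 + 1);
            await Task.Delay(backoffMs + jitterMs);
        }
    }
}
```

You would wrap a call as `RetryAsync(() => client.Messages.CreateAsync(request), IsTransientError)`, where `IsTransientError` is your own check for 429, 503, and 529 responses. Libraries such as Polly offer the same pattern with more configuration options.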

Mistake 3: Trusting Claude's Factual Assertions Without Verification

Claude is not infallible: like any LLM, it can state incorrect information confidently. Never use Claude's responses for security decisions, medical advice, or legal guidance without expert verification:

```csharp
// ❌ RISKY: Using Claude for security validation
var securityAnalysis = await client.Messages.CreateAsync(...);

// "This code is not secure" and "insecure" both contain "secure", so this can approve vulnerable code
if (securityAnalysis.Content[0].Text.Contains("secure"))
{
    approveForProduction = true; // DANGEROUS
}

// ✅ SAFE: Use Claude for analysis, human expertise for decisions
var securityAnalysis = await client.Messages.CreateAsync(request);

Console.WriteLine("Security analysis from Claude:");
Console.WriteLine(securityAnalysis.Content[0].Text);
Console.WriteLine("\nIMPORTANT: Have a security expert review before production deployment.");
```

Mistake 4: Not Monitoring API Costs in Production

An unchecked production deployment can run up hundreds or thousands of dollars in unexpected charges:

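```csharp
public class CostTracker
{
    // Claude 3.5 Sonnet pricing: $3 per million input tokens, $15 per million output tokens
    private const decimal SONNET_INPUT = 0.000003m;
    private const decimal SONNET_OUTPUT = 0.000015m;
    private const decimal DAILY_THRESHOLD = 50m; // $50 daily threshold

    private readonly object _lockObject = new object();
    private DateOnly _day = DateOnly.FromDateTime(DateTime.UtcNow);
    private decimal _dailyCost = 0;
    private bool _alertSent = false;

    public void LogUsage(int inputTokens, int outputTokens)
    {
        decimal cost = (inputTokens * SONNET_INPUT) + (outputTokens * SONNET_OUTPUT);

        lock (_lockObject)
        {
            // Reset the running total when the UTC date changes, so the threshold really is daily
            var today = DateOnly.FromDateTime(DateTime.UtcNow);
            if (today != _day)
            {
                _day = today;
                _dailyCost = 0;
                _alertSent = false;
            }

            _dailyCost += cost;

            // Alert once per day when daily costs exceed the threshold
            if (_dailyCost > DAILY_THRESHOLD && !_alertSent)
            {
                _alertSent = true;
                Console.WriteLine($"⚠️ WARNING: Daily API costs exceed $50 (Current: ${_dailyCost:F2})");
                // Send alert to monitoring system
            }
        }
    }

    public decimal GetDailyCost()
    {
        lock (_lockObject) { return _dailyCost; }
    }
}
```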

Performance and Cost Optimization Strategies

Integrating LLMs introduces new performance and cost considerations different from traditional APIs.

Model Selection by Use Case

Claude offers multiple models with different cost and performance characteristics:

- Claude 3.5 Sonnet: Best balance of capability and cost, $3/$15 per million tokens input/output. Use for complex reasoning and analysis.

- Claude 3.5 Haiku: Fastest and cheapest, $0.80/$4 per million tokens. Use for simple classification and extraction.

- Claude 3 Opus: Maximum capability, $15/$75 per million tokens. Use only when Sonnet is insufficient.

```csharp
public class SmartModelSelector
{
    public string SelectModel(string taskType) => taskType.ToLowerInvariant() switch
    {
        "classification" => "claude-3-5-haiku-20241022",   // Simple decision, low cost
        "code_generation" => "claude-3-5-sonnet-20241022", // Complex reasoning, moderate cost
        "reasoning" => "claude-3-5-sonnet-20241022",       // General purpose
        "expert_analysis" => "claude-3-opus-20240229",     // Maximum capability needed
        _ => "claude-3-5-sonnet-20241022"
    };
}
```
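To see how much model choice matters, the sketch below estimates the monthly cost of the same workload on each model. The prices are the per-million-token figures listed above. The workload numbers are illustrative assumptions, so substitute your own measured token counts and current pricing.

```csharp
using System;
using System.Collections.Generic;

// USD per million tokens (input, output), matching the list above. Prices change,
// so treat these as placeholders and confirm against Anthropic's pricing page.
var pricing = new Dictionary<string, (decimal Input, decimal Output)>
{
    ["claude-3-5-haiku-20241022"] = (0.80m, 4m),
    ["claude-3-5-sonnet-20241022"] = (3m, 15m),
    ["claude-3-opus-20240229"] = (15m, 75m),
};

// Estimated monthly cost for a fixed daily request volume and average tokens per request
decimal EstimateMonthlyCost(string model, int requestsPerDay, int inputTokens, int outputTokens, int days = 30)
{
    var (inputPrice, outputPrice) = pricing[model];
    decimal perRequest = (inputTokens * inputPrice + outputTokens * outputPrice) / 1_000_000m;
    return perRequest * requestsPerDay * days;
}

// Illustrative workload: 10,000 requests/day, ~1,500 input and ~300 output tokens each
foreach (var model in pricing.Keys)
{
    Console.WriteLine($"{model}: ${EstimateMonthlyCost(model, 10_000, 1_500, 300):N2}/month");
}
```

With these figures the example workload comes to $720 per month on Haiku, $2,700 on Sonnet, and $13,500 on Opus. Routing simple tasks to the cheapest adequate model therefore pays off quickly.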

Reducing Token Usage Through Prompt Engineering

Fewer tokens mean lower costs and faster responses:

```csharp
// ❌ VERBOSE: 46 words of instructions, and no output format
const string POOR_PROMPT = @"Please analyze the following code in detail and provide a comprehensive review
that covers every aspect of software engineering best practices including but not limited to
performance optimization, security vulnerabilities, code maintainability, naming conventions,
and design patterns. Make sure to explain why each issue is problematic.";

// ✅ CONCISE: 19 words, and a compact output format also keeps output tokens down
const string GOOD_PROMPT = @"Review this C# code for:
- performance issues
- security risks
- maintainability problems
Return findings as a bullet list with severity.";
```

Caching Results for Repetitive Queries

Many applications ask Claude similar questions repeatedly. Caching prevents redundant API calls:

```csharp
using Anthropic;
using Microsoft.Extensions.Caching.Memory;
using System.Security.Cryptography;
using System.Text;

public class CachedClaudeService
{
    private const string CACHE_KEY_PREFIX = "claude_response_";

    private readonly IMemoryCache _cache;
    private readonly AnthropicClient _client;

    public CachedClaudeService(IMemoryCache cache)
    {
        _cache = cache;
        _client = new AnthropicClient();
    }

    public async Task<string> GetAnalysisAsync(string query)
    {
        string cacheKey = CACHE_KEY_PREFIX + GenerateHash(query);

        // Return the cached answer if this exact query was seen in the last 24 hours
        if (_cache.TryGetValue(cacheKey, out string? cachedResult) && cachedResult is not null)
        {
            return cachedResult;
        }

        var response = await _client.Messages.CreateAsync(new CreateMessageRequest
        {
            Model = "claude-3-5-sonnet-20241022",
            MaxTokens = 1024,
            Messages = new List<MessageParam>
            {
                new MessageParam { Role = MessageRole.User, Content = query }
            }
        });

        string result = response.Content[0].Text;
        _cache.Set(cacheKey, result, TimeSpan.FromHours(24));
        return result;
    }

    // SHA-256 of the query, hex-encoded, so arbitrarily long queries map to fixed-size keys
    private static string GenerateHash(string input) =>
        Convert.ToHexString(SHA256.HashData(Encoding.UTF8.GetBytes(input)));
}
```
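The service above keys the cache on the query alone. That is safe only while the model and settings never change. Below is a minimal sketch of a stricter key, assuming you want a cached answer reused only when every input that shapes the response matches. `BuildCacheKey` is a hypothetical helper.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: build a cache key from every input that shapes the response,
// so a cached Sonnet answer is never served for a Haiku request, and editing the
// system prompt or MaxTokens naturally invalidates old entries.
static string BuildCacheKey(string model, string? systemPrompt, int maxTokens, string query)
{
    // Length-prefix each field so different splits of the same characters cannot collide
    var sb = new StringBuilder();
    foreach (string part in new[] { model, systemPrompt ?? "", maxTokens.ToString(), query })
    {
        sb.Append(part.Length).Append(':').Append(part);
    }

    byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes(sb.ToString()));
    return "claude_response_" + Convert.ToHexString(hash);
}
```

A missing system prompt and an empty one produce the same key here, which is usually what you want.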

Building AI-First Applications: Architecture Patterns

Modern applications go beyond simple "ask Claude a question" patterns. AI-first architectures embed intelligence throughout the system.

Pattern: AI-Enhanced Search

Traditional search returns documents; AI-enhanced search understands intent and provides synthesized answers:

```csharp
using Anthropic;

public class AIEnhancedSearch
{
    private readonly AnthropicClient _client;
    private readonly ISearchEngine _searchEngine; // Your own search abstraction, not part of the SDK

    public AIEnhancedSearch(AnthropicClient client, ISearchEngine searchEngine)
    {
        _client = client;
        _searchEngine = searchEngine;
    }

    public async Task<string> SearchWithInsightsAsync(string userQuery)
    {
        // Step 1: Execute traditional search
        var searchResults = await _searchEngine.SearchAsync(userQuery);
        string combinedResults = string.Join("\n", searchResults.Select(r => r.Content));

        // Step 2: Let Claude synthesize results
        var response = await _client.Messages.CreateAsync(new CreateMessageRequest
        {
            Model = "claude-3-5-sonnet-20241022",
            MaxTokens = 1024,
            System = "You are a helpful research assistant. Synthesize the provided search results into a coherent, accurate answer.",
            Messages = new List<MessageParam>
            {
                new MessageParam
                {
                    Role = MessageRole.User,
                    Content = $"User question: {userQuery}\n\nSearch results:\n{combinedResults}"
                }
            }
        });

        return response.Content[0].Text;
    }
}
```
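Two prompt instructions can make synthesized answers easier to verify: cite the results by number, and say so when the results do not answer the question. Below is a minimal sketch of building such a prompt with a size limit. `BuildGroundedPrompt` is a hypothetical helper, and the character budget is a rough stand-in for a token budget.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: number each search result so the answer can cite [1], [2], ...,
// and stop adding results once the question plus results would exceed maxChars.
static string BuildGroundedPrompt(string userQuery, IEnumerable<string> results, int maxChars)
{
    var sb = new StringBuilder();
    sb.AppendLine($"User question: {userQuery}");
    sb.AppendLine();
    sb.AppendLine("Search results:");

    int index = 1;
    foreach (var result in results)
    {
        string entry = $"[{index}] {result}";
        if (sb.Length + entry.Length > maxChars)
        {
            break; // Results are assumed to be ranked, so the least relevant ones are dropped
        }
        sb.AppendLine(entry);
        index++;
    }

    // The closing instruction is added on top of the budget
    sb.AppendLine();
    sb.AppendLine("Answer using only these results and cite them by number. " +
                  "If they do not contain the answer, say so.");
    return sb.ToString();
}
```

The resulting string would replace the `Content` of the user message in `SearchWithInsightsAsync`.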

Decision Framework: Should You Integrate Claude Today?

Start with Claude if you have:

- Recurring manual tasks that Claude can accelerate: code generation, documentation, analysis, customer support classification, or content creation

- Projects with budgets that can absorb $200–500 monthly in API costs during development and scaling

- Teams comfortable with prompt engineering iteration and continuous optimization

Delay Claude integration if:

- Your application requires 99.99% uptime with zero external dependencies

- User data contains medical, financial, or highly sensitive information requiring on-premise processing

- The problem is better solved with deterministic code or structured decision logic

Start with a pilot rather than full integration:

- Create a small feature (e.g. an AI-assisted support helper or document analyzer) to validate value

- Monitor API costs, user adoption, and response quality

- Use pilot learnings to inform architecture for full-scale integration

Getting Started: Your Implementation Roadmap

Take these steps to begin integrating Claude into your .NET applications today:

1. Install the official SDK with `dotnet add package Anthropic`.
2. Secure your API key and set environment variables following your organization's secret management practices.
3. Start with a non-critical feature, like an intelligent helper or analysis tool, that doesn't block core functionality.
4. Implement comprehensive error handling and cost monitoring before any production deployment.
5. Build feedback loops where users rate response quality; this data drives prompt optimization.
6. Iterate on system prompts based on real usage. Generic prompts yield generic responses; domain-specific prompts unlock Claude's potential.
7. Monitor costs and performance systematically. Log token usage, API latency, and user engagement for every interaction.
8. Plan for scale with caching, model selection strategies, and budget safeguards before user growth accelerates API costs.

Explore AI Integration for Your Project

Claude integration transforms how your .NET team builds intelligent applications. Whether you're automating internal workflows, enhancing customer experiences, or building AI-first products, Claude's combination of capability, safety, and cost-efficiency makes it an excellent choice for .NET enterprises.

Ready to accelerate your AI integration journey? Hrishi Digital Solutions helps organizations architect, implement, and optimize AI solutions within existing .NET systems. Our AI integration and automation services provide end-to-end support for your AI transformation.

We can help you:

- Evaluate whether Claude or alternative LLMs align with your specific requirements and constraints

- Design integration architecture that scales efficiently and maintains cost control

- Implement production-grade patterns including error handling, monitoring, and security

- Optimize prompts and model selection to maximize response quality while minimizing costs

- Integrate Claude cleanly into existing .NET APIs, web apps, and cloud environments

Contact our team to discuss your AI integration goals.

Disclaimer: Code examples are for illustrative purposes. Always verify the latest SDK documentation and pricing before deployment.

Hrishi Digital Solutions

Enterprise-grade digital solutions specializing in modern web development, cloud architecture, and AI integration.
